This page showcases Virtual Reality (VR) storytelling and experiential projects built with tools and techniques such as 3D scanning, photogrammetry, motion capture, Metahuman construction, world building, Blender, Reality Capture, Motive, and Unreal Engine, to bridge the gaps between existing reality and our imagination and memories, or to create alternate worlds altogether.

I.

KARA - A VR EXPERIENCE

a commentary on love in the age of a technological revolution

A screen recording of the VR experience. With a headset, participants find themselves in the club environment, hear the music as if immersed in the space, feel the phone vibrate, grab it, throw it away, teleport to move through the space, and activate a response to move the experience forward. All of these decisions and actions were part of the learning and design process.

Description:

This project started as an 8-level storyline (see below) and, in the last week before the final, had to be re-conceptualized so that it still made sense as a story and kept a multi-sensory dimension. Ericka Njeumi, a colleague and multimedia filmmaker, collaborated with me on this project to polish the concept, write and redo the script, and design the iPhone visuals. Detailing all the challenges this VR project entailed would use up all the characters available for a blog post and then some, so I’ll just say that after many long nights, tears, and anxiety attacks, it failed: I didn’t have a project to present. During winter break I corrected it, and this is its latest iteration.

Ericka and I set out (1) to create an anthropomorphized representation (bringing the elements of an app into reality) of the virtual dating space that portrays ‘love’ as an easy matter (social media is easy, thus love is easy); and (2) to reflect on how this age of technology affects the way we love and communicate.

RESEARCH:

After more research, it turns out that experts say technology, while it may affect the way we court (emailing, texting, emojis, liking a photo, selfies, etc.), doesn’t necessarily change the way we love or who we choose to love.

Helen Fisher, a biological anthropologist, says: “The three brain systems for mating and reproduction, situated deep in our limbic system, the most primitive part of the brain where we generate and feel our emotions, together with other parts of the brain orchestrate our sexual, romantic, and family lives (they are responsible for sex drive, feelings of intense romantic love, and feelings of deep cosmic attachment to a long-term partner). They evolved over 4.4 million years ago among our first ancestors, and they are not going to change if we swipe left or right. We may sit on a park bench, in a coffee shop, etc., and our ancient brain may snap into action like ‘a sleeping cat awakened,’ and we smile, listen, and parade the way our ancestors did 100,000 years ago. We can be given various people, all the dating sites, but the only algorithm is our own human brain.”

According to her, we also have four broad styles of thinking and behaving, linked to the dopamine, serotonin, testosterone, and estrogen systems. Her studies found that those who are very expressive of the dopamine system (curious, creative, spontaneous, energetic) are drawn to people like themselves. Those expressive of the serotonin system, who tend to be traditional, respect authority, follow the rules, and are religious, also go for traditional partners. In these two cases, similarity attracts. In the other two cases, opposites attract. Those very expressive of the testosterone system tend to be analytical, logical, direct, and decisive, and they go for their opposite: people with very good verbal and people skills who are very intuitive, nurturing, and emotionally expressive. “These are natural patterns of mating choice and modern technology won’t change who we choose to love,” says Fisher.

However, in her observations, modern technology is producing an important trend, a concept associated with the paradox of choice. We didn’t use to have the opportunity to choose someone from thousands of people on dating sites. It seems that there is a sweet spot in our brain where we can embrace 5-9 alternatives, and after that we get into “cognitive overload.” This seems to bring us to a new way of courtship called “slow love,” a term coined by Fisher. “In an age where we have too many choices,” she says, “where we have very little fear of pregnancy and disease, we have no feelings of shame for sex before marriage, people are taking their time to love. What we see is an extension of the pre-commitment stage before you tie the knot. The marriage used to be the beginning of the relationship, now it is the finale.”

The human brain always triumphs, she adds. In the US, 86% of Americans will marry by age 49, and in other countries worldwide where people don’t get married as often, many settle down with a long-term partner. “Perhaps this pre-commitment stage could be a way to eliminate bad relationships and increase the rate of happy marriages,” she says. In another study she interviewed about 11,000 married people in the US and asked whether they would remarry the person they are currently married to, and 81% said yes.

Yet, with all these findings, her conclusion is that the greatest change in modern romance and family life is not technology, not even “slow love,” but women filling up the job market in cultures around the world. “We are now in a marriage revolution, we are shedding 10,000 years of our farming tradition and moving forward towards egalitarian relationships between the sexes - highly compatible with the ancient human spirit.”

She concludes her talk by saying “love and attachment will prevail. Technology cannot change it. Any understanding of human relationships must take into account one of the most powerful determinants of human behavior: the unquenchable, adaptable, and primordial human drive to love.”

PROJECT SPECIFIC SKILLS + CHALLENGES:

learned how to use Unreal Engine as a creative platform for storytelling: materials, functionality, possibilities, world building, blueprints, etc.

created 3D virtual worlds; created, modeled, and animated 3D characters; used blueprints to trigger / deactivate actions; used timelines and levels for transitions and an easier workflow;

incorporated videos, images, audio as well as 3D characters and activated/animated them in 3D space;

used the VR Pawn as a first person character, camera movements, grabbing / releasing functions applying gravity principles;

used the Oculus Quest 2 for playing VR experiences in the headset;

added multi-sensory (non-VR) elements to projects (wind, scent, taste, tactile) for a more immersive experience;

learned to make people comfortable and fully immersed in a virtual environment when sight, one of the most important senses for awareness, trust, and safety, is obscured; provided on-boarding sessions, assistance, and guidance throughout a live experience;

learned a new medium for storytelling with a tool where many of the traditional storytelling elements are not accessible: how do we make someone feel like they are moving through space, allow the viewer to make decisions and affect the arc/narrative of a story, design the blueprints in a way that gives the participant the possibility to act and in a way that is intuitive without requiring much guessing on the participant’s part, etc.

INITIAL PROJECT PROPOSAL

The story explores love as the answer to the character’s quest of overcoming a mysterious and sudden psychological dive into a world of darkness and despair.

In a reality increasingly shaped by superficial values [driving forces - work, money, greed, fame, power, sex, survival], unrealistic expectations of culture [challenges - what is celebrated and what is looked down on, the pressure on the individual to be more, to suppress, to fake it], and chronic separation [consequences - isolation, loneliness, despair], the question arises of what can save us from nihilism and self-destruction, as well as restore balance within us and others.

The storyline follows the character’s journey through a set of experiences forced upon her to seek another answer to what makes life worth living [the purpose of life] and concludes with the celebration of her emerging out of it with a renewed sense of being.

Storyboard & Script

2. PROCESS:

Avaturn: 3D Character using an AI generated image.

Source: Unreal Engine Marketplace

Figma: Video of the iPhone scene.

2.1 After storyboarding and writing the script, the next step was to generate all the materials, characters, sounds, voices, etc. that would then be used to build the videos or the materials applied as screen covers, such as a big LED screen in the disco. We used AI generating software such as Runway, Stable Diffusion, and Eleven Labs, as well as royalty-free sound libraries and YouTube, to source all the raw materials.

Stable Diffusion: AI generated images for iPhone interface used in the VR storyline.

2.2 Then we needed to generate the characters and the appropriate animations according to our script. For this project we didn’t use Mocap to record and animate the characters because of the limited time for delivery. Instead we used Avaturn and Mixamo as an alternative, modeling the characters from an existing photo and changing parameters in real time. We could also choose which animations they would come with.

Avaturn: self portrait generated character animation.

Mixamo: sample animation using a 3D scan of myself.

2.3 Due to time constraints (this was a 7-week project) I looked to outsource the worlds to save time on building the environments for each scene. The idea was to modify the elements within each scene on my own and add characters to it, focusing on the interaction part. The worlds came with their own challenges. Some included just the assets (not a complete world build). Some were extraordinarily heavy files, or had lighting so complex that rendering became time consuming to the point of crashing the engine again and again. Others had blocks that were hard to reverse engineer or work around, because moving through space or teleporting was not possible past certain thresholds. After spending weeks figuring out why the engine would collapse and cleaning up the first scene (the photo studio) to make it manageable, it was time to decide which world to focus on, one around which an entire story could be designed, and that was the clubbing scene.

2.4 Once Ericka and I settled on working on the final story, we started generating the specific content needed for each interaction.

I prepared all the files Ericka needed to design the interfaces for the iPhone scene (AI images, sounds, AI generated voices, and 3D assets).

Ericka then designed the iPhone content in Figma (phone calling with a photo ID, ring of the phone, voicemail interface, voice recording when the phone was answered, social media screen with AI generated images, etc.). She made versions with and without the mouse so we could have choices on how we’d use the controllers in the VR world.

I took the final renderings and continued to edit them in Photoshop and Premiere Pro for a final video that would be used as a material for the iPhone asset. The content needed to be timed to the script and matched with the phone ring, the AI voice of the character, environment sounds, etc. The video format also needed to be adjusted to the iPhone asset we used, because the mapping onto it was not accurate: even when mapped onto a plane, the image was distorted and covered parts of the phone (speakers and edges), which made it look unrealistic. Fixing it required masking skills in Photoshop (PS6).

Premiere Pro: Final video with sound. The black area around the iPhone was masked out for final output in PS6.
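The format adjustment described above comes down to scaling the video so it fits the phone's screen area without stretching. The sketch below is plain Python, not the Premiere Pro or Unreal Engine workflow we actually used; the function name `fit_inside` and the example dimensions are hypothetical and only illustrate the arithmetic.

```python
def fit_inside(video_w, video_h, screen_w, screen_h):
    """Largest rectangle with the video's aspect ratio that fits the screen.

    Returns (width, height, offset_x, offset_y), where the offsets center
    the scaled video inside the screen area. All names and units here are
    illustrative assumptions, not values from the project.
    """
    # Use the smaller of the two scale factors so neither side overflows.
    scale = min(screen_w / video_w, screen_h / video_h)
    out_w = video_w * scale
    out_h = video_h * scale
    # Center the result; the leftover area is what would need masking.
    return out_w, out_h, (screen_w - out_w) / 2, (screen_h - out_h) / 2


# Hypothetical example: a 200x100 video placed on a 100x100 screen area.
print(fit_inside(200, 100, 100, 100))
```

Using one scale factor for both axes is what prevents distortion; any area the video does not cover has to be masked or filled separately.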

2.5 Back to Unreal Engine. All the raw materials were ready (AI generated voices, sounds, music, characters, animations). So far, things had lined up, and I was able to bring in the final video and map it onto the phone in Unreal Engine. When it came to building the interactions (the first one was grabbing the phone), the challenges started to mount against time. I couldn’t figure out why grabbing the phone was not working. I could reach it but couldn’t grab it. I followed countless tutorials, spent nights at school before the final, had office hours with virtual production instructors, and still couldn’t move past this roadblock. We added a collision sphere to the VR Pawn, tried all the settings in the menu, and nothing. It turned out that the controllers in the VR Pawn needed collision spheres of their own as well.
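To illustrate why a controller could reach the phone yet never grab it, here is a minimal sketch of a sphere overlap test in plain Python. It is not Unreal Engine Blueprint code and does not reproduce the VR Pawn's actual settings; the function names, positions, and radii are all hypothetical.

```python
import math


def spheres_overlap(center_a, radius_a, center_b, radius_b):
    """Two spheres overlap when their centers are no farther apart
    than the sum of their radii."""
    return math.dist(center_a, center_b) <= radius_a + radius_b


def can_grab(controller_pos, controller_radius, object_pos, object_radius):
    """Hypothetical grab check: a controller with no collision sphere
    (radius None) never registers an overlap, however close it gets."""
    if controller_radius is None:
        return False
    return spheres_overlap(controller_pos, controller_radius,
                           object_pos, object_radius)


# Controller touching the phone, but without a collision sphere.
print(can_grab((0, 0, 0), None, (0, 0, 0), 5.0))
# Same controller once it has a collision sphere of its own.
print(can_grab((0, 0, 0), 5.0, (8, 0, 0), 5.0))
```

In this simplified picture, a collision sphere on the pawn's body does not help, because the overlap has to be detected at the hand that does the grabbing.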

From there on it was about setting the blueprints to activate sounds at certain action points, using distance triggers to open doors (those needed unblocking from the original build), and adding functions to the characters to simulate swiping left/right as one would do on a dating app in the real world.

It may seem like a simple project without much complexity, but for someone learning a new platform, with only one week to make the story, the assets, and the technical part work in synchrony, it was an impossible task, especially since in an engine like Unreal one setting may make all the difference and has the power to stop you in your tracks even at the simplest challenge.
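The distance triggers mentioned above can be pictured as a simple check that runs as the participant moves. This is a sketch in plain Python rather than an Unreal Engine blueprint; the class name `DistanceTrigger`, the radius, and the positions are hypothetical.

```python
import math


class DistanceTrigger:
    """Hypothetical door trigger: open while the player is within `radius`."""

    def __init__(self, position, radius):
        self.position = position
        self.radius = radius
        self.is_open = False

    def update(self, player_position):
        """Re-evaluate the trigger; return True only when the door state
        changed, which is the moment a sound or animation would fire."""
        inside = math.dist(self.position, player_position) <= self.radius
        changed = inside != self.is_open
        self.is_open = inside
        return changed


door = DistanceTrigger(position=(0, 0, 0), radius=200.0)
for player in [(500, 0, 0), (100, 0, 0), (150, 0, 0)]:
    changed = door.update(player)
    print(player, "open" if door.is_open else "closed", "changed" if changed else "")
```

Reporting only state changes keeps the door sound from replaying on every update while the participant stands inside the trigger area.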

SKILL BUILDING & LEARNINGS:

1. Foundational: Unreal Engine, Steam, Epic Games (Virtual Reality 3D World Building, Blueprints, Oculus Quest 2, etc.)

2. Auxiliary Software: Polycam (3D object / 3D spaces Scanning); Reality Capture (Photogrammetry); Blender (3D Modeling), Metahuman and Mixamo (Character Modeling / Animation); Stable Diffusion (AI generated images); Eleven Labs (AI generated voice), Royalty Free Sound Platforms, Unreal Engine Marketplace, OSC Screen Recording, Celtx (Screenwriting), Otter AI (AI generated text), Photoshop, Premiere Pro, Figma (iPhone interface).

3. Storytelling Skills: developing storytelling concepts / storyboards for VR, multi-sensory experiences augmenting the VR to create more immersive experiences, script writing and strategizing workflow, team collaboration based on strengths, time and resource management.

4. Other: being creative, diligent, and persistent while working under stress and against time, multitasking, researching through countless YouTube how-to videos, problem solving, asking for help when needed, and not giving up until the work is completed.

CONCLUSION:

I finished the project during the winter break. As we say at ITP, failing is part of learning, and success is not about the perfect output of a project but about the process and how you managed it to completion.

Multi-sensory Storytelling in Virtual Reality, Class 2024

Instructor: Winslow Porter

II.

SEND ME AN ANGEL

a VR Halloween multi-sensory experience

Send me an Angel is a short and intense virtual reality experience, where participants are thrust into a nightmarish realm reminiscent of hell to face their own demise. They are transported to a disorienting world where nothing is as it seems. In this bizarre landscape, they encounter their personal guardian angel and are tasked with making choices that will determine their escape from this bewildering place. To prove their worthiness, they must navigate a series of challenging tests and tasks, with no assurances of success or salvation. Send me an Angel is a gripping and immersive journey that challenges participants to rely on their wit and bravery as they seek a way out of this perplexing and surreal world.

Behind the scenes VR Experiences

Anna Nikaki and César Loayza offer onboarding instructions to our classmates.

Storyboard

Elyana Javaheri during our VR experience.

Winslow Porter, our multi-sensory VR instructor, during our VR experience.

Team

Loayza, César (VR developer, concept development, storyboarding, world building - second scene and environment mood, sound, effects)

Ma, Michelle (effects, asset building)

Mereuta, Andriana (concept development, storyboarding, world building - first scene, script, producing - multi-sensory physical elements, documentation)

Nikaki, Anna (concept development, storyboarding, presentation, world building - the pot, AI voice generation)

Blender. Cleaning up the 3D model used in Mixamo as full body rendering, and ‘head only’ for Metahuman.

Reality Capture. Creating the 3D model and rough clean up of extra material captured alongside the model.

Unreal Engine. Mapping the facial features that will be modeled further in Metahuman plugin.

MOCAP

Motion Capture using Motive

Calibrating

+

Suiting Up

+

Recording

TECHNOLOGY

Metahuman. The neck-to-toe part of the body is an avatar pre-recorded with motion capture software; the recorded movement can then be attributed to other avatars in post.

Ericka Njeumi, my colleague, calibrating the OptiTrack cameras to make sure they are all sampled and activated in Motive. Calibrating is done by (1) waving the magic wand in front of all the cameras placed around the room, and (2) setting the ground plane by setting the Y and X axes.

Adding 41 markers for full body reading and matching to the digital avatar. The T-pose is the start/end of each recording, needed to match the physical plane with the virtual field in Motive and start the recording session.

Caption. Andi’s avatar.

Metahuman. Rendering process of the 3D model and customizing features to make it look real or like an 'alter ego' altogether.

HUMAN INGENUITY

Caption. Andi suited up.

III.

software

a developer’s arsenal of tools and techniques

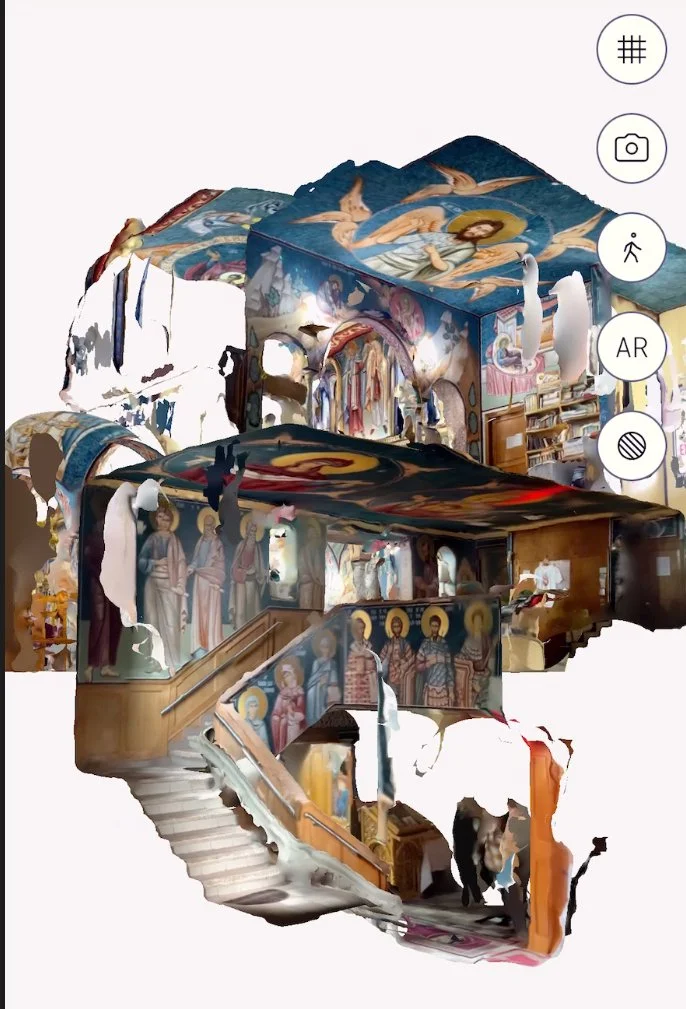

Polycam. A screen recording of a 3D environment scan. The rendering is raw and wasn't yet processed for image accuracy.

Scroll to the bottom of the page to see a VR experience using this rendering.

Reality Capture. Close up, 3D scan.

Full body animations using two different platforms.

Unreal Engine. Rendering before exporting to Metahuman.

Digital Camera. Real Person.

Two different methods of 3D scanning, environment vs. person.

Mixamo. 3D avatars that can be selected and matched with different animations from a library of models. In this instance the model is our initial 3D scan to which we added an animation.

Dressing up. No reflective surfaces. Suit, cap, gloves, shoes, velcro bands for extra fastening support.

Reality vs what is captured in the software. This animation can then be reconfigured and attributed to different characters / avatars.

Sample of a Mocap recording session.

magic

Caption. Andi & Ericka.

Metahuman. Digital Avatar.

3D

asset creation

Scanning

+

Modeling

+

Animation

Photogrammetry. Overview. 3D scanning process.

Photogrammetry. 3D scanning process of a living person. Cameras placed 360 degrees around the subject fire at the same time to record a replica of one's body.

IV.

LOVE & CULTURE

a VR archive of various perspectives on love

An archive simulation experience: a 'synthetic architecture' site intended to replicate a museum or an escape room. It acts as a container for different stories on the spectrum of human and divine love, collected from people who represent one culture and yet are multifaceted in their accounts because of their identities and life experiences.

ABSTRACT

Context: This VR experience is an extension of my research project on love and contains on-camera testimonies of people whom I previously photographed mainly in my home country, the Republic of Moldova.

Project Goals:

I was seeking alternative ways of archiving people's stories digitally and testing new ways to enable learning by immersion and interaction. I worked to expand my storytelling tools using symbols, light, and elements in the scene that would trigger events based on intuitive cues. I also experimented with creating environments or using minimal symbolism to transport the visitor into the world of the narrator, sometimes ‘literally’ by bringing in a 3D scan of the church, or by scaling the portraits 1:1 so the viewer is able to see the image as if standing in front of that person, eye to eye.

Learning Moments: Technically, I expanded my knowledge of building environments in Unreal Engine: creating materials and meshes from scratch, importing 3D assets and then manipulating the scene to make the renderings functional, using blueprints to trigger events with boxes or interactive cues, using lights to create moods or cues, adding functionality to objects such as grabbing or physical gravity, and building both in the regular Unreal environment and in VR, as well as learning the platform and its various functions. Creatively, it allowed me to think outside the box and explore new ways of storytelling, given that the platform has both an incredible array of possibilities and limitations compared to traditional media such as video.

First User Testing Feedback:

make a 3D scan of the priest at the entrance of the church to make it more ‘realistic’;

try the Flythrough app as an alternative to Polycam - tried, but it seems to be more like a video than a 3D environment;

for the intro section - do both voice and text: i.e. what is the experience about, who are the people, what do they do;

change the appearance of an object once the visitor enters an area;

give directions;

keep each interview 1 min long (this response varied depending on the person's preference);

keep the environments simple - the stories are more important (this response also varied by preference);

implement videos as screens in the existing environment.

Next Steps: This is a developing project. I want to build an extensive digital archive of these interviews, potentially including other countries, and also continue user testing sessions to get feedback and gain insights into the directions in which it could be improved or extended.

Credit: Jonathan Turner, “Synthetic Architecture” class.